Welcome!

I’m a staff scientist in the Oviedo Lab, an electrophysiology lab in the Department of Neuroscience at Washington University in St. Louis (WashU). I’m also an associate member of the Centre for Philosophy of Memory, where I research the role of memory in perception and the phenomenology of episodic memory. Previously, I was a lecturer in the Department of Philosophy and the Philosophy-Neuroscience-Psychology program at WashU; a postdoctoral visitor with the Harris Lab at York University, studying the integration of auditory and proprioceptive feedback in the control of movement; and a postdoctoral research fellow at the Network for Sensory Research in the Department of Philosophy, University of Toronto. I completed my Ph.D. in philosophy at Rice University (Houston, Texas), where I also coauthored the program’s textbook in mathematical logic.

Computational Neuroscience

My work focuses on three projects:

- Neuromorphic circuits for speech recognition, especially circuits inspired by firing-rate and membrane-current models of auditory edge detection.

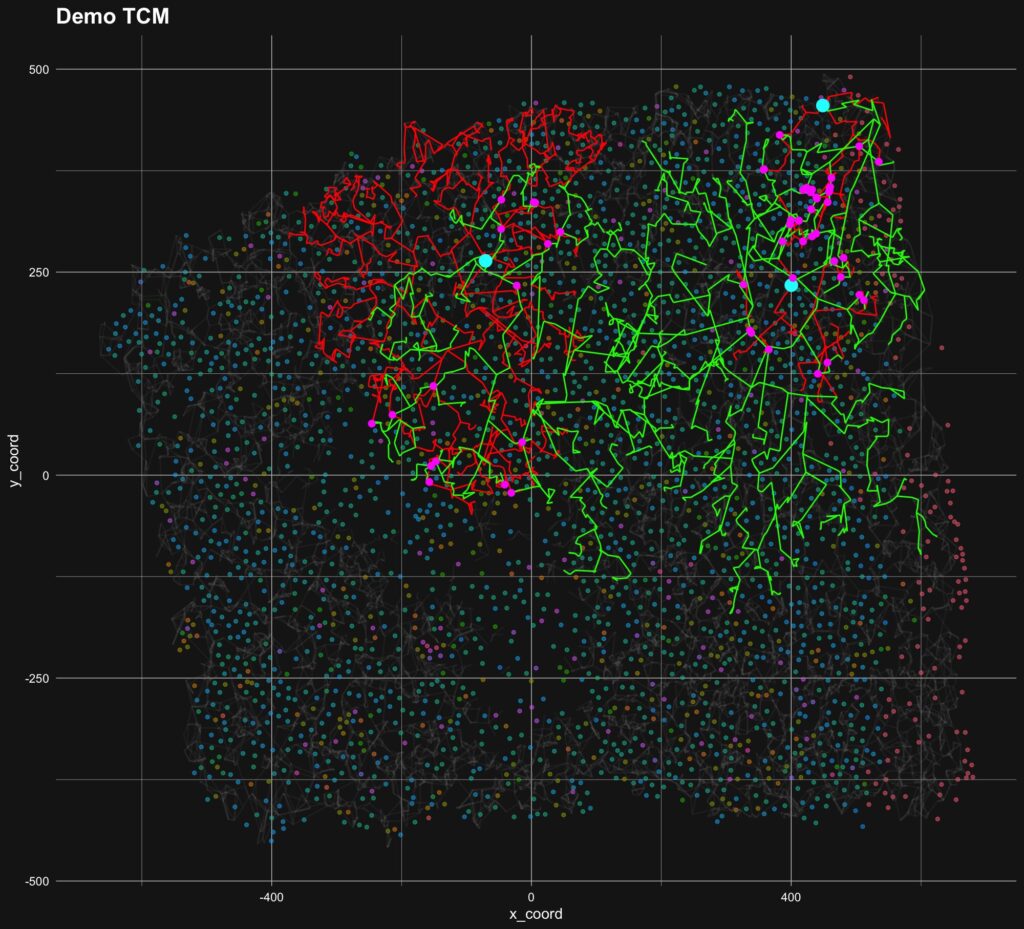

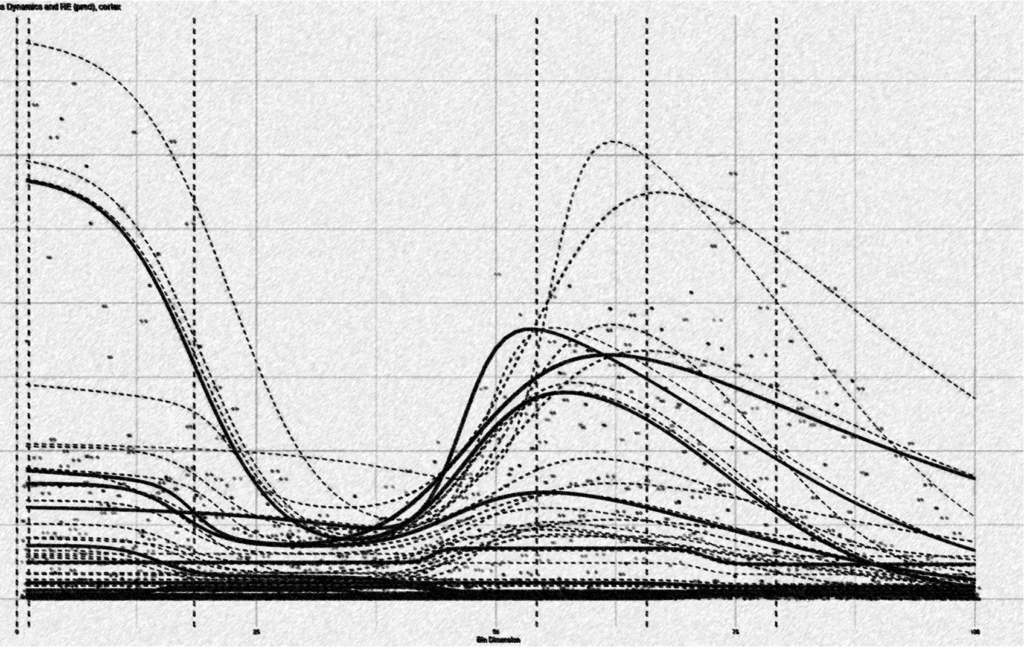

- Molecular mechanisms behind auditory cortex lateralization, especially developing a nonlinear mixed-effects model of gene transcript density across the cortex using spatial transcriptomics data.

- Lateralized recurrent pathways in auditory cortex, including quantifying recurrence by measuring the exponential decay constants of spike autocorrelation in excitatory neurons.
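To make the decay-constant idea concrete, here is a minimal sketch in plain Python. It is not the method implemented in the neurons package; the function names `autocorrelogram` and `fit_exponential_decay` are hypothetical. It assumes sorted spike times, a known baseline to subtract, and a simple log-linear least-squares fit of counts ≈ A·exp(−lag/τ), where τ is the decay constant.

```python
import math

def autocorrelogram(spike_times, bin_width, max_lag):
    """Histogram of positive pairwise lags (t_j - t_i for j > i) below max_lag.

    Assumes spike_times is sorted ascending. Returns (bin_centers, counts).
    """
    n_bins = int(round(max_lag / bin_width))
    counts = [0] * n_bins
    for i, t_i in enumerate(spike_times):
        for t_j in spike_times[i + 1:]:
            lag = t_j - t_i
            if lag >= max_lag:
                break  # sorted input: later spikes only give larger lags
            k = int(lag // bin_width)
            if k < n_bins:
                counts[k] += 1
    centers = [(k + 0.5) * bin_width for k in range(n_bins)]
    return centers, counts

def fit_exponential_decay(lags, values, baseline=0.0):
    """Fit log(values - baseline) = log(A) - lag / tau by least squares.

    Bins at or below the (assumed) baseline are skipped. Returns (tau, A).
    """
    pts = [(x, math.log(y - baseline)) for x, y in zip(lags, values) if y > baseline]
    if len(pts) < 2:
        raise ValueError("need at least two bins above baseline")
    n = len(pts)
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxx = sum((x - mx) ** 2 for x, _ in pts)
    sxy = sum((x - mx) * (y - my) for x, y in pts)
    slope = sxy / sxx
    if slope >= 0:
        raise ValueError("no decay: fitted slope is non-negative")
    intercept = my - slope * mx
    return -1.0 / slope, math.exp(intercept)
```

The log-linear fit is used here only for brevity. It weights bins differently from a nonlinear least-squares fit of the exponential, which may be preferable for sparse, noisy autocorrelograms.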

These projects live in two R packages:

- neurons: High-performance C++ objects for modeling neurons and neural circuits, including (or soon to include) tools for estimating auto- and cross-correlation, building spiking neural networks, and transcriptomic circuit mapping. [documentation]

- wispack: Implements warped sigmoidal Poisson-process mixed-effects models, a tool for testing between-group effects on the spatial distribution of genes. [documentation] [preprint]

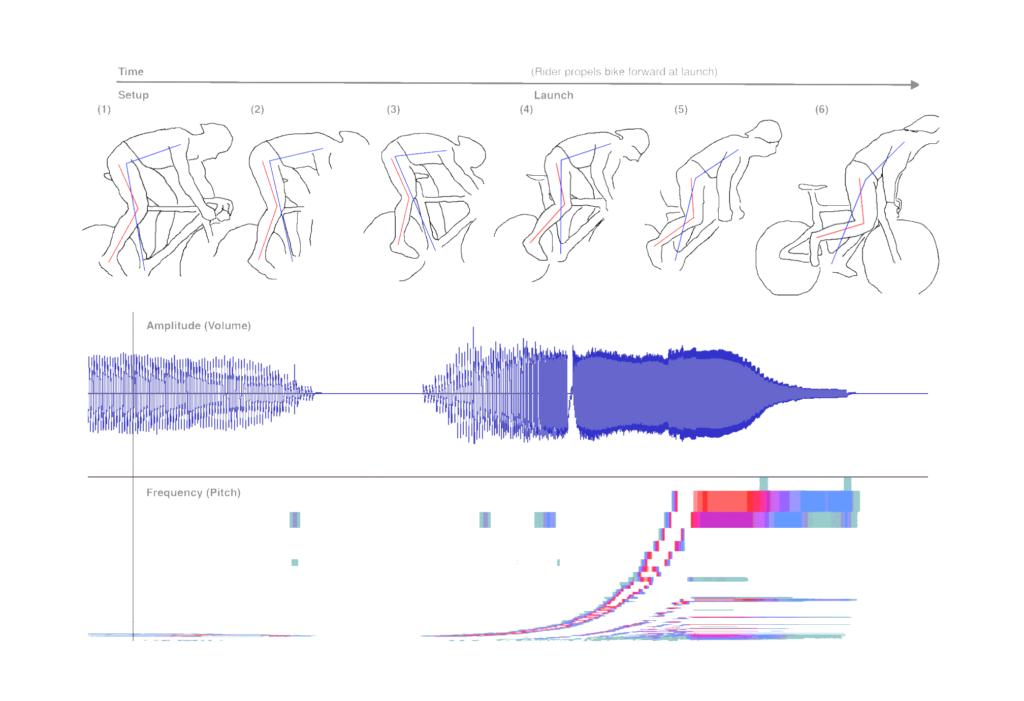

Movement Sonification

Before my transition to neuroscience, I ran behavioral psychology experiments on how auditory feedback can augment, replace, and enrich natural proprioception, improving motor learning and motor control in fast, skilled movements. This work involved developing ultra-fast wearable embedded sensor systems for movement sonification. I worked with the Cortex-M4 and ESP32, developed bare-metal digital sound synthesis techniques (two-timer pulse-width modulation, modeled on a class-D amplifier), used inertial sensors, designed bespoke PCB feathers (KiCad), and 3D-printed enclosures (FreeCAD).

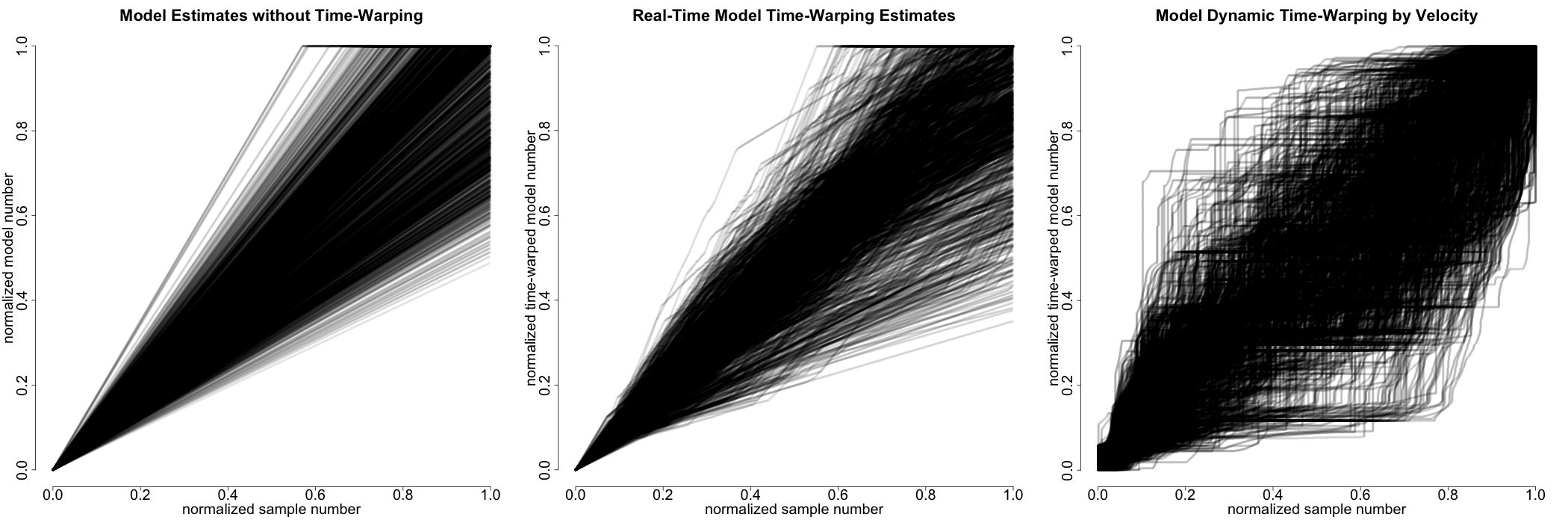

As part of this sonification work, I wrote motion-processing algorithms both for real-time processing on embedded hardware (C++) and for post-processing (R). This work involved motion detection and segmentation, coordinate transformations, input integration, path comparison (error estimation), and time warping (both dynamic time warping in post-processing and real-time online warping estimates).
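For readers unfamiliar with the post-processing time warping mentioned above, the sketch below shows classic dynamic time warping (DTW) between two movement paths. It is a generic textbook version in Python, not the R code used in this work, and covers only the offline case, not real-time online warping. The function name `dtw_distance` is hypothetical. It assumes each path is a sequence of equal-dimension points, with Euclidean distance as the local cost.

```python
import math

def dtw_distance(a, b):
    """Classic dynamic time warping distance between two point sequences.

    Each element of a and b is a tuple of coordinates (e.g. (x, y, z)).
    Local cost is Euclidean distance; match, insertion, and deletion steps
    are allowed, with no warping-window constraint.
    """
    n, m = len(a), len(b)
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # a advances, b's point is reused
                cost[i][j - 1],      # b advances, a's point is reused
                cost[i - 1][j - 1],  # both advance together
            )
    return cost[n][m]
```

For example, a reach sampled as `[(0, 0), (1, 0), (2, 0)]` and a slower copy `[(0, 0), (1, 0), (1, 0), (2, 0)]` warp onto each other at zero cost. This is the property that lets DTW compare trajectories of different durations.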

Phenomenal Consciousness

In addition to my empirical stuff, I also do interdisciplinary research on how we subjectively experience the world. I’m particularly interested in how memory and sensory perception interact to afford consciousness of the past and present. Most of my work focuses on experiencing what’s not there (memories, dreams, hallucinations, VR), the feelings of presence and pastness, and the neural correlates of consciousness.

You might check out this piece and this piece I wrote on presence and digital fluency, or this paper on how perception involves experience of the past. The paper was one of two runners-up for the essay prize at the Centre for Philosophy of Memory. I summarize the idea in a blog post.

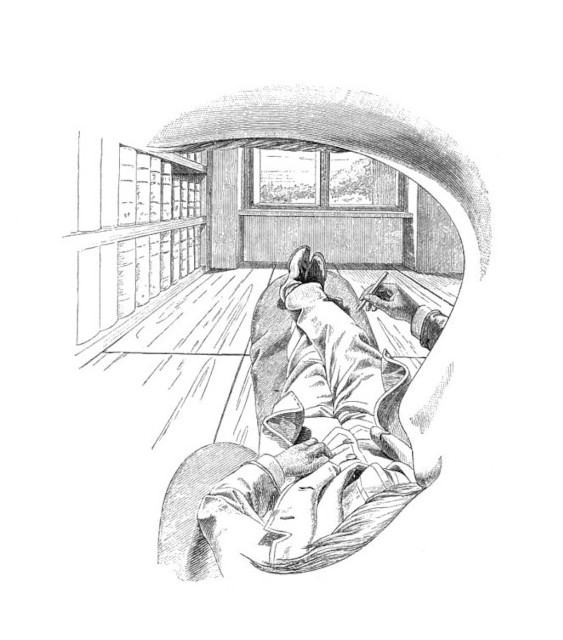

Image: Self-portrait by Ernst Mach, 1886

Coding Portfolio

Modeling Software

- 2025. neurons, high-performance C++ objects for modeling neurons and neural circuits, including (or soon to include) tools for estimating auto- and cross-correlation, building spiking neural networks, and transcriptomic circuit mapping. [documentation] (R package, Rcpp, C++)

- 2025. wispack, warped sigmoidal Poisson-process mixed-effects models for testing for functional spatial effects in spatial transcriptomics data. [documentation] [preprint] (R package, Rcpp, C++)

- 2024. Hidden-state Bayesian learning, a simulated Bayesian reasoner that learns a reach target from auditory feedback, intended for modeling sonification data. (R scripts, C++)

- 2023. Generalized linear modeling (GLM), demo of task-dependent somatomotor cortex responses from simulated fMRI data. (Python, CoLab)

- 2023. Heuristics-based physical symbol system simulation, an instructional demo in which the algorithm solves a version of the river-crossing problem. (Python, CoLab)

- 2022. Two-pivot reach model for tracking position through Cartesian space from raw gyroscope readings. (C++, Arduino)

Statistics and Data Analysis

- 2025. Bayesian MCMC parameter estimation, an instructional demo on how to use Markov-chain Monte Carlo (MCMC) simulations to estimate parameters with Bayes’ rule. (R script)

- 2024. Kinematic data analysis, a full pipeline (data synchronization, signal filtering, linear mixed-effects modeling, and nonparametric bootstrapping) used for post-processing and statistical significance testing of kinematic and accuracy data (optical and inertial) from a motor control study involving reaches with movement sonification. (R scripts)

- 2023. Sentiment analysis with deep neural network, instructional demo performing sentiment analysis of IMDb movie reviews with explanation of network opacity. (Python, CoLab, PyTorch)

- 2023. Linear decoding (logistic regression) of motor tasks from simulated somatomotor cortex fMRI data. (Python, CoLab)

- 2023. Single-layer neural network (McCulloch-Pitts Neuron), instructional demo on learning with the Perceptron Convergence Rule. (Python, CoLab)
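As a small illustration of the Perceptron Convergence Rule from the last demo above, here is a minimal Python sketch. It is not the Colab demo itself; the names `train_perceptron` and `predict` and the AND-gate example are hypothetical. It assumes a single threshold unit with binary inputs and targets, and a bias handled as an extra weight on a constant input of 1.

```python
def step(z):
    # Threshold unit: outputs 1 when the weighted input reaches 0, else 0.
    return 1 if z >= 0 else 0

def train_perceptron(samples, labels, lr=1.0, max_epochs=100):
    """Perceptron learning rule: w <- w + lr * (target - output) * x.

    A constant 1 is appended to each input so the last weight acts as a bias.
    Returns (weights, epochs_used). Training stops after the first epoch with
    no errors, which the convergence theorem guarantees is reached in finitely
    many updates when the data are linearly separable.
    """
    w = [0.0] * (len(samples[0]) + 1)
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for x, t in zip(samples, labels):
            xb = list(x) + [1.0]
            y = step(sum(wi * xi for wi, xi in zip(w, xb)))
            if y != t:
                errors += 1
                w = [wi + lr * (t - y) * xi for wi, xi in zip(w, xb)]
        if errors == 0:
            return w, epoch
    return w, max_epochs

def predict(w, x):
    # Apply the trained threshold unit to one input vector.
    return step(sum(wi * xi for wi, xi in zip(w, list(x) + [1.0])))

# Usage: learn logical AND, a linearly separable problem.
inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 0, 0, 1]
weights, epochs = train_perceptron(inputs, targets)
```

Swapping the targets for XOR (`[0, 1, 1, 0]`) is a quick way to see the rule fail to converge, since XOR is not linearly separable.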

Hardware and Embedded Systems

- 2024. Movement sonification hardware code for a real-time embedded (wearable) sensor system that tracks motion via inertial sensors at 1 kHz while providing auditory feedback with a latency of only 1–2 ms. (C++, Arduino, ESP32)

- 2021. Two-timer pulse-width modulation (class-D amplifier) for low-overhead bare-metal digital sound synthesis on single-core embedded processors. (C++, Arduino, Cortex-M4F, ESP32)

Interested in chatting about human perception or movement sonification?